Overview

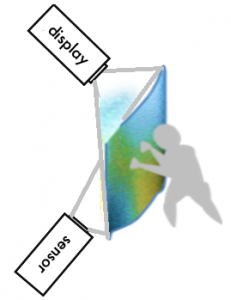

The idea behind ActiveCurtain was to relate the feeling of one’s body touching the material to a change of color where the child presses. The physical design artifact is essentially a rear projection of a colored surface onto a soft screen. A Microsoft® Kinect sensor is placed behind the soft screen and detects the location and depth of the “press” (see figure to the right).

There have been several iterations of ActiveCurtain. The first three versions took the depth values directly from the Kinect sensor and assigned different colors to different depth ranges, creating a multi-colored layered projection on the curtain. The fourth version detected the dent in the curtain as a “blob” and used that information to project different animations. The fifth version used the same method and added an extra sound layer to the interaction. OpenFrameworks 0.0071 for Visual Studio 2010 was used with the OpenCV and Kinect sensor addons to detect the curtain’s depth and project it back onto the curtain.

ActiveCurtain (Versions 0.1 – 0.3)

In the first three versions of ActiveCurtain (see image below), a colored and thresholded depth image from the Kinect is projected back onto the screen. Pressing the surface of the screen therefore changes the color of the indented area in discrete thresholded steps, depending on the depth of the touch. A spherical version, which considers more elaborate temporal and spatial behaviors, was also developed.
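The depth-to-color thresholding described above can be sketched as follows. This is a minimal, language-neutral illustration in Python, not the project's openFrameworks code: the band edges, the colors, and the function names `depth_to_color` and `colorize` are all assumptions made for the example, and the input is taken to be press depth in millimetres measured from the resting curtain.

```python
from bisect import bisect_right

# Assumed thresholds (mm) separating the depth bands; not taken from the project.
BAND_EDGES_MM = [20, 60, 120]

# One assumed RGB color per band: untouched, light, medium, deep press.
BAND_COLORS = [
    (0, 0, 0),
    (0, 0, 255),
    (0, 255, 0),
    (255, 0, 0),
]


def depth_to_color(press_mm):
    """Return the RGB color of the band that a press depth falls into."""
    # bisect_right counts how many thresholds are <= press_mm,
    # which is exactly the index of the band.
    band = bisect_right(BAND_EDGES_MM, press_mm)
    return BAND_COLORS[band]


def colorize(depth_image):
    """Map a 2D list of press depths to a 2D list of RGB tuples."""
    return [[depth_to_color(d) for d in row] for row in depth_image]
```

For instance, `colorize([[0, 70]])` gives one black pixel and one green pixel, so a deeper press steps through the colors rather than blending between them.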

ActiveCurtain (Version 0.4)

Based on the observed interactions and discussions with the staff, we developed ways to make the design more inviting for interplay around simultaneous interaction. This version of ActiveCurtain uses multipoint detection to send TUIO messages to any TUIO client, which can project different animations (and sounds) onto the ActiveCurtain’s surface, depending on the type and degree of multi-touch interaction.

TUIO tracker

As the TUIO tracker program, we used a modified version of Patricio Gonzalez Vivo’s KinectCoreVision tracker, which allowed us to calibrate and adjust the blob tracking parameters and send the resulting blob data via TUIO to our client application.
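To illustrate what a blob tracker does in principle, here is a minimal sketch of finding pressed regions in a depth image and reporting each one with a normalized position and size, the kind of data a TUIO tracker sends. It is not KinectCoreVision's algorithm: it uses a plain flood fill, and `find_blobs`, `threshold`, and `min_area` are hypothetical names chosen for the example.

```python
from collections import deque


def find_blobs(depth, threshold, min_area=4):
    """Return blobs as dicts with a normalized centroid and area fraction.

    depth is a 2D list of press depths; cells >= threshold count as pressed.
    """
    rows, cols = len(depth), len(depth[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0] or depth[r0][c0] < threshold:
                continue
            # Breadth-first flood fill over 4-connected pressed cells.
            queue = deque([(r0, c0)])
            seen[r0][c0] = True
            cells = []
            while queue:
                r, c = queue.popleft()
                cells.append((r, c))
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and not seen[nr][nc]
                            and depth[nr][nc] >= threshold):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            if len(cells) < min_area:
                continue  # treat very small regions as sensor noise
            # Centroid of the cell centers, normalized to the 0..1 range.
            cx = sum(c + 0.5 for _, c in cells) / len(cells) / cols
            cy = sum(r + 0.5 for r, _ in cells) / len(cells) / rows
            blobs.append({"x": cx, "y": cy,
                          "area": len(cells) / (rows * cols)})
    return blobs
```

Normalizing the coordinates to the 0..1 range keeps the tracker independent of the projector resolution, so the client can scale them to whatever surface it draws on.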

TUIO client

In this case we used a Processing program as the TUIO client. Memo Akten’s MSAFluid for Processing was used to display real-time 2D fluid simulations on the curtain. This made it possible to project different fluid animations depending on the coordinates and size of the multi-touch blobs.
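As a rough illustration of how a client might turn blob coordinates and size into animation parameters, the sketch below maps one normalized blob to a screen position, a force vector, and a radius. It is written in Python rather than Processing and does not use the MSAFluid or TUIO library APIs. The `Blob` fields loosely follow the position, area, and velocity values of a TUIO blob, and `blob_to_emitter`, `force_scale`, and `max_radius_px` are hypothetical names and values.

```python
from dataclasses import dataclass


@dataclass
class Blob:
    session_id: int
    x: float     # normalized horizontal position, 0..1
    y: float     # normalized vertical position, 0..1
    area: float  # fraction of the tracked surface covered, 0..1
    vx: float    # normalized horizontal velocity
    vy: float    # normalized vertical velocity


def blob_to_emitter(blob, screen_w, screen_h,
                    force_scale=50.0, max_radius_px=120.0):
    """Convert a normalized blob into pixel-space emitter parameters."""
    # Scale the normalized position to the projected window.
    pos = (blob.x * screen_w, blob.y * screen_h)
    # Faster movement pushes the simulated fluid harder (assumed scaling).
    force = (blob.vx * force_scale, blob.vy * force_scale)
    # Use the square root so the drawn area grows linearly with blob area.
    radius = min(max_radius_px, (blob.area ** 0.5) * min(screen_w, screen_h))
    return {"pos": pos, "force": force, "radius": radius}
```

A client would call this once per blob update and pass the result to whatever drawing or simulation routine it uses, so that larger and faster presses produce larger and stronger disturbances.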

Materials:

- An IKEA coat rack or similar that will serve as a frame

- Semi-transparent white sheet

- 1 projector

- 1 Kinect

Software:

The executable files to run ActiveCurtain can be found here.

The requirements to run the ActiveCurtain programs are:

- MS Kinect SDK or OpenNI drivers

- Java runtime

The code for versions 0.3 to 0.5 of ActiveCurtain can be found here. To compile the code, the following is needed:

- OpenFrameworks for Visual Studio 2010

- Kinect OpenNI or MS Kinect SDK drivers

- Processing IDE

For version 0.5, the following is needed for the audio to work:

- Ableton Live 9.0 Suite

- Cycling '74 Max runtime

- Virtual MIDI cable (LoopMIDI)